Dall-E 3 represents the third iteration of the Dall-E text-to-image model developed by OpenAI. If you're not familiar with this company, they're also behind the famous ChatGPT large language model.

The release of Dall-E 3 marked an important moment in the world of artificial intelligence because it showed a major improvement over the previous iteration. Up until that point, no other model could generate images of similar quality to those from Midjourney and Stable Diffusion. Dall-E 3 matches that quality (and sometimes even produces better results), while also providing unique benefits.

It can be quite confusing to use a text-to-image model like Midjourney if you have no prompting experience. To create images that stand out, you need to write really good prompts. These prompts need to include details like a specific art style, aesthetic, camera view, lighting, etc. One of the things I like the most about Dall-E 3 is that you can use a conversational tone to instruct the model to generate images.

I'm getting ahead of myself. I'll discuss these intricacies later on in the article. Let's first talk about what Dall-E 3 is and how you can access it.

What Is Dall-E 3?

Dall-E is a text-to-image AI model. If that simple definition confuses you, I'll elaborate further. It's essentially a tool powered by artificial intelligence that can transform a text description into an image.

The first iteration of the Dall-E model was released back in January 2021. In my honest opinion, it wasn't very good. But that's because the technology just wasn't there yet. The amount of progress that has been achieved since has been nothing short of incredible.

I've seen a ton of people debate whether Dall-E 3 or Midjourney is the better text-to-image model. I don't pick favorites. I honestly like them both. If Dall-E 3 had been released back when I first started using these AI-powered tools, I would probably like it better. However, the model I've used the most is without a doubt Midjourney. And I've gotten quite good at writing prompts for it.

How Much Does Dall-E 3 Cost?

I know that some people use the Bing Image Creator to make images with Dall-E 3. Although this is definitely an approach that you can take to make these images for free, it involves waiting a long time for each image to be generated. If you want to produce images faster, you'll have to subscribe to ChatGPT Plus.

ChatGPT Plus costs $20 per month and gives you access to GPT-4 (which can browse the internet to pull in up-to-date information), access to Dall-E 3, as well as priority access to new features and improvements. I think this is a fair price considering everything that you get from it.

How to Write Prompts for Dall-E 3

The thing I like the most about Dall-E 3 is the fact that you can use a conversational tone to write prompts and get good results. This makes sense since the company that created this model also made ChatGPT.

Since the best way to access Dall-E 3 is through ChatGPT Plus, you're essentially sending messages through ChatGPT to get your images generated. You can actually have conversations with the model, and ask it to make certain changes to an image every step of the way.

With that being said, the process of writing prompts in Dall-E 3 can be greatly simplified. This is not possible to do with other text-to-image models (at the time of writing this article), so this is a nice change of pace. I personally don't mind writing prompts in a style that I'd use in Midjourney because I've gotten used to it. But I can see how this simplified process can be useful for beginners or people who may not want to spend time learning how to write prompts.

Another cool thing about Dall-E 3 is that you can instruct it to generate an image in a conversational tone but then ask it what prompt it wrote to get that result. Let me show you how that works with an example. I wrote the following message and sent it to ChatGPT 4:

"I want you to create an image of a comedic pictorial mark logo that shows a squirrel holding a big bag of acorns"

This is what the AI model created from the instructions that I sent it.

The image looks great, especially considering that I just gave an incredibly basic description to the AI model. Of course, my instruction is not the same as the prompt that was used to create the image. My instruction only told the model to write a prompt, and that prompt is what was used to generate the image.

If you're new to tools powered by artificial intelligence, then what I just described above might be a bit too difficult to understand right now. But let me show you what I mean. After Dall-E 3 generated the image you see above, I asked it to share the prompt that it used to create it.

The AI model provided a response that featured the exact prompt that was used to create the image. As you can see, this is what I mean when I say that your instruction and the prompt can be two very different things. However, that's only if you decide not to write a prompt yourself.

I believe that Dall-E 3 works best when you actually write a prompt and instruct the model to use it exactly as you've written it. I'm going to show you what type of results you can expect from Dall-E 3 when you write prompts yourself.

What I dislike about the prompt the AI model wrote for itself is that it's too long and cluttered with what I'd consider unnecessary details. I like to keep my prompts concise but I always make sure that they contain all relevant details.

Dall-E 3 Prompt Examples

In order to show you what Dall-E 3 is truly capable of, I have to write advanced prompts. In my opinion, this is the only way to get this generative AI model to produce images that truly stand out. Let me show you what I mean with a few different prompt examples.

prompt: cartoon character design, 3D animated, a charming anthropomorphic fox dressed in a detective's outfit suspiciously looking into the distance in the middle of a busy city street, comedic, polished

This is a standard type of prompt that I would write when interacting with text-to-image models like Stable Diffusion and Midjourney. In order to instruct Dall-E 3 to use this exact prompt, all I have to do is begin my message by saying "create an image with the following prompt" before sharing the exact prompt.
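The structure of this kind of prompt can be sketched in a few lines of Python. This is only an illustration and not part of Dall-E 3 or ChatGPT: the helper names `compose_prompt` and `wrap_for_chatgpt` are hypothetical, and all the code does is join keyword fragments with commas and put the instruction phrase in front of the result.

```python
def compose_prompt(*parts: str) -> str:
    """Join non-empty prompt fragments into one comma-separated prompt."""
    cleaned = [p.strip() for p in parts if p and p.strip()]
    return ", ".join(cleaned)


def wrap_for_chatgpt(prompt: str) -> str:
    """Prefix the prompt with the instruction to use it exactly as written."""
    return f"Create an image with the following prompt: {prompt}"


# Hypothetical usage: style keywords first, then the subject, then the mood.
prompt = compose_prompt(
    "cartoon character design",
    "3D animated",
    "a charming anthropomorphic fox dressed in a detective's outfit",
    "comedic",
    "polished",
)
print(wrap_for_chatgpt(prompt))
```

Keeping the fragments separate like this makes it easy to swap out one keyword (for example, the art style) and compare the results.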

The image generation process lasts roughly 15 seconds in most cases. Sometimes, it can take a bit longer but I've never experienced it taking more than half a minute. If you're new to AI-powered tools like this one, the prompt you see above may seem complex. However, you get used to writing them quickly and it becomes very easy.

As for the image itself, I think that it looks incredible. When you look at it, it's difficult to tell that it was created by artificial intelligence with nothing more than a text prompt. The attention to detail is stunning, and I like the fact that even keywords like 'comedic' made an impact on the generated result.

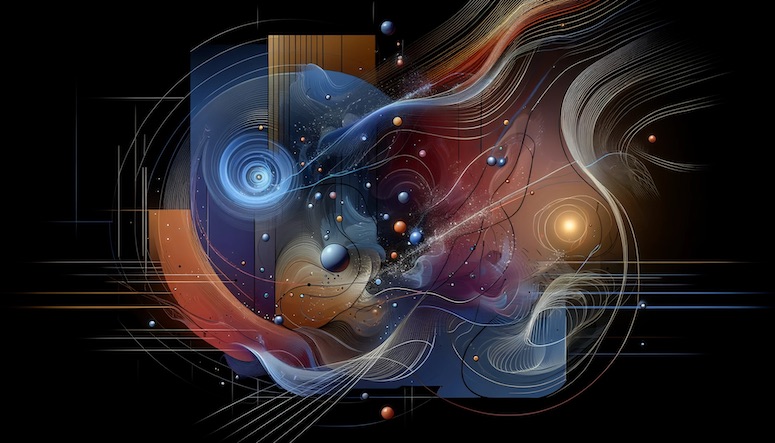

prompt: abstract art, minimalist art style, the flow of information through higher dimensions that humans can't see, aspect ratio 16:9

The default aspect ratio for images created with Dall-E 3 is 1:1, but you can change it for a specific image. You can do this simply by stating the aspect ratio you want in your prompt.
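As a rough sketch of how an aspect ratio request could be handled, the Python below appends the ratio to a prompt and picks the nearest output size. It rests on an assumption that is not from this article: that the available sizes are the three that OpenAI documents for the dall-e-3 model in its Images API (1024x1024, 1792x1024, and 1024x1792). ChatGPT may choose sizes differently, and the function names are hypothetical.

```python
# Assumption: the sizes OpenAI documents for dall-e-3 in its Images API.
# ChatGPT itself may handle aspect ratio requests differently.
SUPPORTED_SIZES = [(1024, 1024), (1792, 1024), (1024, 1792)]


def with_aspect_ratio(prompt: str, ratio: str) -> str:
    """Append the requested aspect ratio to the end of the prompt."""
    return f"{prompt}, aspect ratio {ratio}"


def closest_size(ratio: str) -> str:
    """Return the supported size whose shape is closest to a ratio like '16:9'."""
    width, height = (int(x) for x in ratio.split(":"))
    target = width / height
    best = min(SUPPORTED_SIZES, key=lambda s: abs(s[0] / s[1] - target))
    return f"{best[0]}x{best[1]}"


print(with_aspect_ratio("abstract art, minimalist art style", "16:9"))
print(closest_size("16:9"))
```

Under that assumption, a 16:9 request maps to the wide 1792x1024 size, which is close to 16:9 but not exactly that ratio.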

The art piece generated by the AI model is incredible. You don't need a complex prompt to create something beautiful with AI. However, you do need an idea that's not used too frequently. I personally love to create abstract art using text-to-image models.

prompt: anime art style, darkcore aesthetic, a nameless hero is sitting alone in a dark room and pondering life, he must confront his own demons in order to save the world, grim, dark, peculiar

I'm at a point where I've written thousands of different prompts and covered so many different ideas and concepts. Nowadays, I often write prompts on autopilot and I never know what I'll come up with. Sometimes, the ideas are playful and joyous, while other times they're dark and grim.

Regardless of the overall theme in this image, it's hard to deny just how good it looks. It perfectly captures what I described. The aesthetic applied to the image is also marvelous. I'm very impressed with how this turned out.

prompt: rock album artwork, a rebel is facing away from the camera and looking at a city ruined by crime, hate, and capitalism knowing that the only way to save the world is through music

There used to be a time when you had to pay good money to get quality artwork for your album or song. Those days are gone thanks to AI-powered tools like Dall-E 3. You can simply describe an artwork design in plain English, and the AI model will create it for you.

This is a concept that obviously blends well with this music genre, which brings me to a very important point. One of the best ways to get great results from text-to-image models is to make sure your prompt fits a specific style, genre, or aesthetic.

prompt: fantasy character, 3D animated, RPG video game character, a female druid with a glowing green aura is channeling her inner strength to access her magical abilities, set in an enchanting magical forest

I'm so impressed with how this image looks that I kind of wish that this was an actual character in a video game that I could play right now. This iteration of the Dall-E generative AI model represents a huge leap forward. I don't even have to write a bunch of highly detailed instructions in the prompt to get an image that looks exactly as I imagined it. I just mentioned a few important keywords, and the model knows exactly what to do with them.

Final Thoughts

As a quick summary at the end of this article, I want to reiterate what I consider to be some of the biggest advantages of Dall-E 3. For one, you can use natural language and actually engage in conversation with the AI model to produce the image that you want. This is excellent for beginners who might not be used to writing prompts for generative AI models like Midjourney and Stable Diffusion.

Another thing that I like is that you can include text in the images, which, at the time of writing this article, other text-to-image AI models struggle to do.

With all of that being said, I do have to mention that you will get superior results from Dall-E 3 if you write advanced prompts. That's the approach I personally use when making images with this AI model. However, I've been using both Midjourney and Stable Diffusion for a long time so I'm used to writing well-structured prompts.